Learning with Less Labels

Learning With Less Labels - YouTube: "Human Activity Recognition: Learning with Less Labels and Privacy Preservation" (UCF CRCV); related video: "FixMatch: Simplifying Semi-Supervised Learning with Consistency..."

Learning With Less Labels (LwLL) - mifasr - Weebly: The Defense Advanced Research Projects Agency will host a proposers' day in search of expertise to support Learning with Less Labels, a program that aims to reduce the amount of information needed to train machine learning models. The event will run on July 12 at the DARPA Conference Center in Arlington, Va., the agency said Wednesday.

DARPA Learning with Less Labels LwLL - Machine Learning and Artificial Intelligence. Sponsor: DOD Defense Advanced Research Projects Agency. Sponsor deadline: Oct 2, 2018. Letter of intent deadline: Aug 21, 2018. UI contact: lynn-hudachek@uiowa.edu. Updated: Aug 15, 2018. DARPA Learning with Less Labels (LwLL), HR001118S0044.

Learning in Spite of Labels (paperback, December 1, 1994): Item weight: 2.11 pounds. Dimensions: 5.25 x 0.5 x 8.5 inches. Best Sellers Rank: #3,201,736 in Books (#1,728 in Learning Disabled Education; #7,506 in Homeschooling). Customer reviews: 4.6 out of 5 stars (6 ratings).

Darpa Learning With Less Label Explained - Topio Networks: The DARPA Learning with Less Labels (LwLL) program aims to make the process of training machine learning models more efficient by reducing the amount of labeled data needed to build the model or adapt it to new environments. In the context of this program, we are contributing Probabilistic Model Components to support LwLL.

Less Labels, More Learning | AI News & Insights (Machine Learning Research, published Mar 11, 2020): In small data settings where labels are scarce, semi-supervised learning can train models by using a small number of labeled examples and a larger set of unlabeled examples. A new method outperforms earlier techniques.
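The semi-supervised snippet does not say which method it covers. One common ingredient of such methods (FixMatch and UPS, both mentioned on this page, each build on a variant of it) is pseudo-labeling: the model labels unlabeled examples itself, and only confident predictions are kept as training targets. A minimal sketch of that selection step only, assuming softmax outputs and an illustrative threshold of 0.95; the logits and the function `select_pseudo_labels` are hypothetical:

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted by each row's max for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def select_pseudo_labels(unlabeled_logits: np.ndarray, threshold: float = 0.95):
    """Return (indices, labels) for unlabeled examples whose top-class
    probability is at least `threshold`; all other examples stay unlabeled."""
    probs = softmax(unlabeled_logits)
    confidence = probs.max(axis=1)
    labels = probs.argmax(axis=1)
    keep = np.flatnonzero(confidence >= threshold)
    return keep, labels[keep]


if __name__ == "__main__":
    # Hypothetical model outputs for three unlabeled examples.
    logits = np.array([
        [8.0, 0.5, 0.2],  # confident, class 0
        [1.0, 1.1, 0.9],  # uncertain, skipped
        [0.1, 0.3, 6.0],  # confident, class 2
    ])
    idx, pseudo = select_pseudo_labels(logits)
    print(idx, pseudo)  # indices 0 and 2, pseudo-labels 0 and 2
```

The selected pairs would then be added to the small labeled set for the next training round; a higher threshold trades fewer pseudo-labels for fewer wrong ones.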

Learning With Auxiliary Less-Noisy Labels - PubMed: The proposed method yields three learning algorithms, which correspond to three prior knowledge states regarding the less-noisy labels. The experiments show that the proposed method is tolerant to label noise and outperforms classifiers that do not explicitly consider the auxiliary less-noisy labels.

Learning with Less Labels and Imperfect Data | Hien Van Nguyen: Program for the 1st Workshop on Medical Image Learning with Less Labels and Imperfect Data (October 17, Room Madrid 5): 8:00-8:05, opening remarks; 8:05-8:45, keynote by Kevin Zhou (Chinese Academy of Sciences).

Human activity recognition: learning with less labels and privacy ...: In this talk, I will discuss our recent work on human activity recognition employing learning with less labels. In particular, I will present our work employing semi-supervised learning (SSL), self-supervised learning, and zero-shot learning. First, I will present our Uncertainty-aware Pseudo-label Selection (UPS) method for semi-supervised ...

[2201.02627v1] Learning with less labels in Digital Pathology via ...: Learning with Less Labels in Digital Pathology via Scribble Supervision from Natural Images, by Eu Wern Teh and Graham W. Taylor. A critical challenge of training deep learning models in the Digital Pathology (DP) domain is the high annotation cost by medical experts.

[2201.02627] Learning with Less Labels in Digital Pathology via ... (Jan 07, 2022): Cross-domain transfer learning from natural images (NI) to DP is shown to be successful via class labels. One potential weakness of relying on class labels is the lack of spatial information, which can be obtained from spatial labels such as full pixel-wise segmentation labels and scribble labels.

What is Label Smoothing? A technique to make your model less… | by ...: A concrete example. Suppose we have K = 3 classes, and our label belongs to the 1st class. Let [a, b, c] be our logit vector. If we do not use label smoothing, the label vector is the one-hot encoded vector [1, 0, 0], and our model will make a ≫ b and a ≫ c. For example, applying softmax to the logit vector [10, 0, 0] gives [0.9999, 0, 0], rounded to 4 decimal places.

Notre Dame CVRL, Towards Unsupervised Face Recognition in Surveillance Video: Learning with Less Labels: To re-identify people across different surveillance cameras in operation using existing state-of-the-art supervised approaches, we need a massive amount of annotated data for training. Training a model with fewer human annotations is a tough task while of ...
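The label-smoothing snippet stops before it defines the technique. A minimal sketch that checks its softmax arithmetic and shows one commonly used formulation of label smoothing, y_smooth = (1 - ε)·y + ε/K; both that formula and the value ε = 0.1 are assumptions, not taken from the snippet:

```python
import numpy as np


def softmax(logits) -> np.ndarray:
    """Softmax of a 1-D logit vector (max-shifted for numerical stability)."""
    z = np.asarray(logits, dtype=float)
    e = np.exp(z - z.max())
    return e / e.sum()


def smooth_labels(one_hot, epsilon: float = 0.1) -> np.ndarray:
    """Assumed formulation: keep (1 - epsilon) of the mass on the true class
    and spread epsilon uniformly over all K classes."""
    y = np.asarray(one_hot, dtype=float)
    k = y.shape[-1]
    return (1.0 - epsilon) * y + epsilon / k


if __name__ == "__main__":
    # The snippet's example: K = 3, logits [10, 0, 0].
    print(np.round(softmax([10.0, 0.0, 0.0]), 4))  # about 0.9999, 0.0, 0.0
    # One-hot target [1, 0, 0] vs. its smoothed version with epsilon = 0.1.
    print(np.round(smooth_labels([1, 0, 0]), 4))   # about 0.9333, 0.0333, 0.0333
```

With the smoothed target, the loss no longer rewards pushing a arbitrarily far above b and c, which is the behavior the snippet's one-hot example illustrates.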

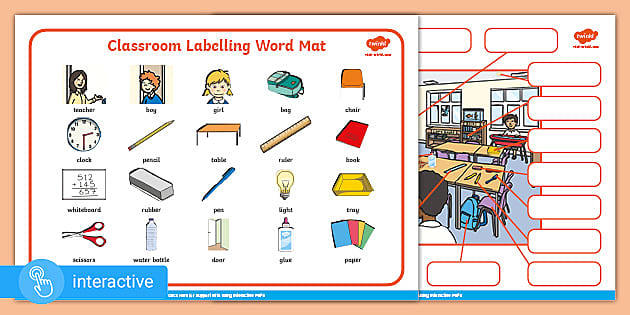

Selective-Supervised Contrastive Learning With Noisy Labels (PDF): ... less noisy, the learned representations with this selective-supervised paradigm will be more robust, naturally follow- ... The main contributions of this paper are summarized in three aspects: 1) We propose selective-supervised contrastive learning with noisy labels, which can obtain robust pre-trained representations by effectively selecting ...

Learning with Less Labeling (LwLL) - darpa.mil: The Learning with Less Labeling (LwLL) program aims to make the process of training machine learning models more efficient by reducing the amount of labeled data required to build a model by six or more orders of magnitude, and by reducing the amount of data needed to adapt models to new environments to tens to hundreds of labeled examples.

Learning with Less Labels and Imperfect Data | MICCAI 2020: This workshop aims to create a forum for discussing best practices in medical image learning with label scarcity and data imperfection. It potentially helps answer many important questions. For example, several recent studies found that deep networks are robust to massive random label noise but more sensitive to structured label noise.

Printable Classroom Labels for Preschool - Pre-K Pages: Good news, I made them for you! This printable set includes more than 140 different labels you can print out and use in your classroom right away. The text is also editable, so you can type the words in your own language or edit them to meet your needs. To attach the labels to the bins in your centers, I love using the sticky back label pockets ...

Learning with Less Labels (LwLL) - Federal Grant: DARPA is soliciting innovative research proposals in the area of machine learning and artificial intelligence. Proposed research should investigate innovative approaches that enable revolutionary advances in science, devices, or systems.

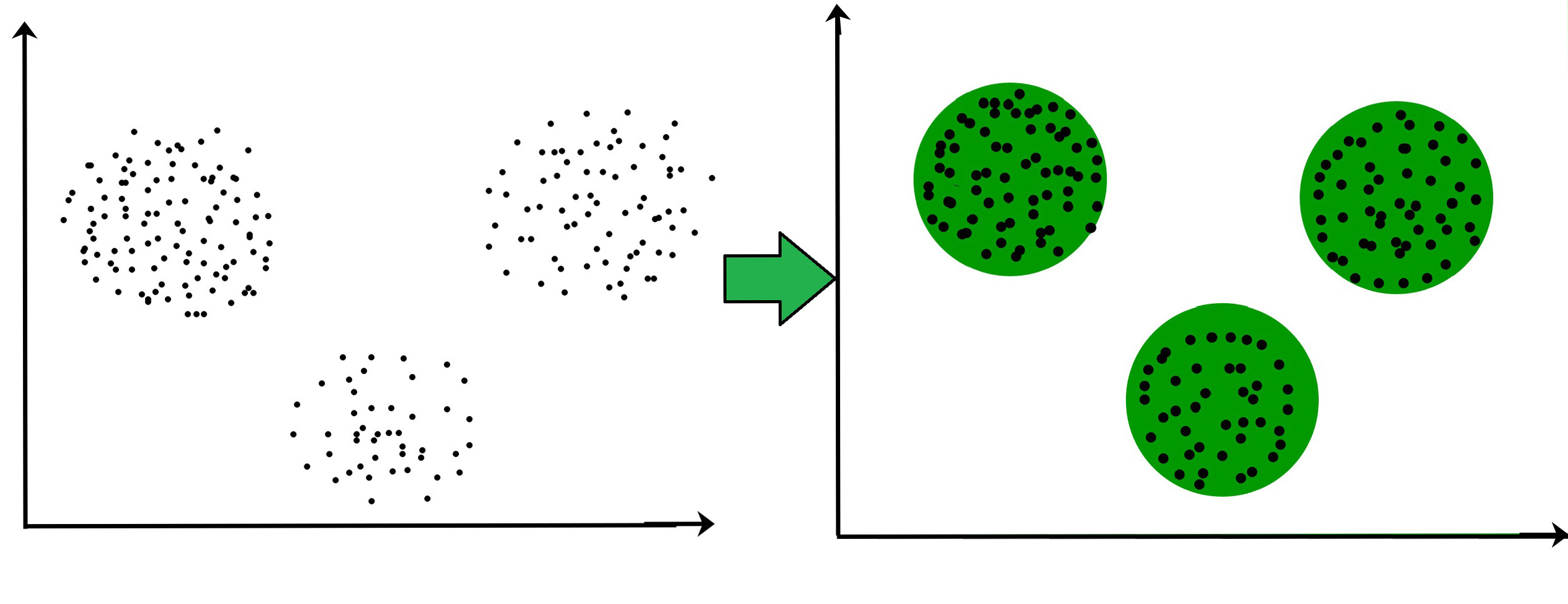

How do you learn labels with unsupervised learning?: Unsupervised methods usually assign data points to clusters, which could be considered algorithmically generated labels. We don't "learn" labels in the sense that there is some true target label we want to identify, but rather create labels and assign them to the data. An unsupervised clustering will identify natural groups in the data, and ...
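The answer's point, that clustering creates labels rather than learning true ones, can be seen with plain k-means. A minimal sketch, assuming k-means as the clustering method and a toy two-blob dataset (both illustrative choices); the cluster ids it returns are arbitrary integers that carry no meaning until a person names them:

```python
import numpy as np


def assign(X: np.ndarray, centroids: np.ndarray) -> np.ndarray:
    """Index of the nearest centroid for every row of X."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)


def kmeans(points, k: int, n_iter: int = 100, seed: int = 0):
    """Plain k-means (Lloyd's algorithm). The returned cluster ids are the
    'algorithmically generated labels': arbitrary integers, not true classes."""
    X = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialize centroids with k distinct data points.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        labels = assign(X, centroids)
        # Move each centroid to the mean of its members; keep it if the cluster is empty.
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return assign(X, centroids), centroids


if __name__ == "__main__":
    # Two obvious groups, no labels given.
    data = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]]
    cluster_ids, _ = kmeans(data, k=2)
    print(cluster_ids)  # the first three share one id, the last three the other
```

Running it with a different `seed` may swap which group gets id 0, which is exactly why these ids are "created" rather than "learned" labels.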

Machine learning with less than one example - TechTalks: A new technique dubbed "less-than-one-shot learning" (or LO-shot learning), recently developed by AI scientists at the University of Waterloo, takes one-shot learning to the next level. The idea behind LO-shot learning is that to train a machine learning model to detect M classes, you need less than one sample per class, that is, fewer than M examples in total.
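The snippet does not explain how fewer examples than classes can suffice. One way this can work, and the idea the Waterloo LO-shot work builds on, is giving each example a soft label (a probability split across classes) so that the space between examples can belong to a class no single example represents. A minimal sketch, assuming a distance-weighted nearest-neighbour rule over soft-label prototypes; the prototype positions, label values, and inverse-distance weighting are illustrative choices, not taken from the source:

```python
import numpy as np


def soft_label_knn(query, prototypes, soft_labels, k: int = 2, eps: float = 1e-12) -> int:
    """Sum the soft labels of the k nearest prototypes, weighted by inverse
    distance, and predict the class with the largest total."""
    P = np.asarray(prototypes, dtype=float)
    L = np.asarray(soft_labels, dtype=float)
    q = np.asarray(query, dtype=float)
    dists = np.linalg.norm(P - q, axis=1)
    nearest = np.argsort(dists)[:k]
    weights = 1.0 / (dists[nearest] + eps)  # eps avoids division by zero on a prototype
    scores = (weights[:, None] * L[nearest]).sum(axis=0)
    return int(scores.argmax())


# Hypothetical setup: two prototypes on a line, three classes.
prototypes = [[0.0], [1.0]]
soft_labels = [
    [0.6, 0.4, 0.0],  # mostly class 0, partly class 1
    [0.0, 0.4, 0.6],  # mostly class 2, partly class 1
]

if __name__ == "__main__":
    for x in (0.1, 0.5, 0.9):
        print(x, soft_label_knn([x], prototypes, soft_labels))  # -> 0, 1, 2
```

Class 1 has no prototype of its own; it wins only in the middle, where the two shared 0.4 shares add up. That is the sense in which two examples can carve out three classes.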

Pro Tips: How to deal with Class Imbalance and Missing Labels (section "Classification with Missing Labels"): In addition to class imbalance, the absence of labels is a significant practical problem in machine learning. When only a small number of labeled examples are available, but there is an overall large number of unlabeled examples, the classification problem can be tackled using semi-supervised learning methods.
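The snippet names semi-supervised learning without picking a method. One classic example is graph-based label propagation: the few known labels spread to nearby unlabeled points through a similarity graph. A minimal sketch of a simple iterative variant, assuming Gaussian similarities with a hand-picked width `sigma` and a fixed iteration count (both illustrative); `-1` marks a missing label:

```python
import numpy as np


def label_propagation(X, y, n_classes: int, sigma: float = 1.0, n_iter: int = 200) -> np.ndarray:
    """y holds a class index for labeled points and -1 for unlabeled ones.
    Returns a predicted class for every point."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    labeled = y >= 0
    # Gaussian affinities between all pairs of points, with no self-loops.
    sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    W = np.exp(-sq_dists / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    T = W / W.sum(axis=1, keepdims=True)  # row-normalized transition matrix
    # Start from one-hot rows for labeled points and zeros for unlabeled ones.
    F = np.zeros((len(X), n_classes))
    F[labeled, y[labeled]] = 1.0
    clamp = F[labeled].copy()
    for _ in range(n_iter):
        F = T @ F              # each point takes a weighted average of its neighbours
        F[labeled] = clamp     # labeled points keep their given labels
    return F.argmax(axis=1)


if __name__ == "__main__":
    # Two groups on a line; only one point per group is labeled.
    X = [[0.0], [0.2], [0.4], [3.0], [3.2], [3.4]]
    y = [0, -1, -1, -1, -1, 1]
    print(label_propagation(X, y, n_classes=2, sigma=0.5))  # -> [0 0 0 1 1 1]
```

Clamping after every step is what keeps the scarce true labels authoritative while the unlabeled points settle toward the group they are most connected to.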

The Positive and Negative Effects of Labeling Students "Learning ...: The "learning disabled" label can lead the student and educators to lower their expectations and goals for what can be achieved in the classroom. In addition to lower expectations, the student may develop low self-esteem and experience issues with peers. Low self-esteem: labeling students can create a sense of learned helplessness.

No labels? No problem! Machine learning without labels using… | by ...: These labels can then be used to train a machine learning model in exactly the same way as in a standard machine learning workflow. Whilst it is outside the scope of this post, it is worth noting that the library also helps to facilitate the process of augmenting training sets and also monitoring key areas of a dataset to ensure a model is ...
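The snippet does not show how the labels are produced in the first place. A common approach in this space, often called weak supervision, is to write several noisy heuristics ("labeling functions") and combine their votes into a training label. A minimal sketch, assuming plain majority voting; the spam/ham task, the function names, and the rules are hypothetical, and this is not the API of the library the post describes:

```python
from collections import Counter
from typing import Callable, List, Optional

ABSTAIN = None  # a labeling function returns this when it has no opinion


# Hypothetical heuristics for a toy spam ("spam") vs. not-spam ("ham") task.
def lf_mentions_free(text: str) -> Optional[str]:
    return "spam" if "free" in text.lower() else ABSTAIN


def lf_has_link(text: str) -> Optional[str]:
    return "spam" if ("http://" in text or "https://" in text) else ABSTAIN


def lf_short_greeting(text: str) -> Optional[str]:
    is_greeting = text.lower().startswith(("hi", "hello"))
    return "ham" if is_greeting and len(text) < 40 else ABSTAIN


LABELING_FUNCTIONS: List[Callable[[str], Optional[str]]] = [
    lf_mentions_free, lf_has_link, lf_short_greeting,
]


def majority_vote(text: str, lfs=LABELING_FUNCTIONS) -> Optional[str]:
    """Combine non-abstaining votes; abstain when nobody votes or on a tie."""
    votes = [v for v in (lf(text) for lf in lfs) if v is not ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]


if __name__ == "__main__":
    unlabeled = [
        "Get FREE stuff at https://example.com",  # -> spam
        "Hi, lunch tomorrow?",                    # -> ham
        "Meeting notes attached",                 # -> None (no votes)
        "Hello, free lunch?",                     # -> None (tie)
    ]
    for text in unlabeled:
        print(repr(text), "->", majority_vote(text))
```

Examples that end up with `None` are simply left out of the training set; more elaborate systems replace the majority vote with a model that estimates how reliable each labeling function is.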

Learning With Auxiliary Less-Noisy Labels - IEEE Xplore: Published in IEEE Transactions on Neural Networks and Learning Systems (Volume 28, Issue 7, July 2017), pages 1716-1721. Date of publication: 06 April 2016. Print ISSN: 2162-237X; electronic ISSN: 2162-2388. PubMed ID: 27071201. INSPEC accession number: 16970087.

Learning with Less Labels (LwLL) | Research Funding: In order to achieve the massive reductions of labeled data needed to train accurate models, the Learning with Less Labels program (LwLL) will divide the effort into two technical areas (TAs). TA1 will focus on the research and development of learning algorithms that learn and adapt efficiently; TA2 will formally characterize machine learning problems and prove the limits of learning and adaptation.

LwFLCV: Learning with Fewer Labels in Computer Vision: This special issue focuses on learning with fewer labels for computer vision tasks such as image classification, object detection, semantic segmentation, instance segmentation, and many others. Topics of interest include (but are not limited to) the following areas:

- Self-supervised learning methods
- New methods for few-/zero-shot learning
