Federated learning with only positive labels

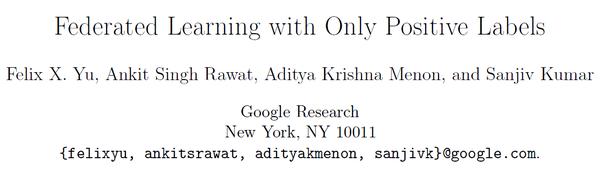

Papers with Code - Federated Learning with Only Positive Labels: To address this problem, we propose a generic framework for training with only positive labels, namely Federated Averaging with Spreadout (FedAwS), where the server imposes a geometric regularizer after each round to encourage classes to be spread out in the embedding space.

Federated Learning from Only Unlabeled Data with...: Supervised federated learning (FL) enables multiple clients to share the trained model without sharing their labeled data. However, potential clients might even be reluctant to label their own data, which could limit the applicability of FL in practice. In this paper, we show the possibility of unsupervised FL whose model is still a classifier for predicting class labels, if the class-prior ...

A survey on federated learning - ScienceDirect: Yu et al. proposed a general framework for training with only positive labels, Federated Averaging with Spreadout (FedAwS), in which the server adds a geometric regularizer after each round to encourage classes to be spread out in the embedding space. However, in traditional training, users also need negative labels, which ...
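The server-side step that the FedAwS snippets describe can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the class embeddings are the rows of a matrix `W`, that the server first averages the clients' copies of `W` (plain FedAvg), and that the geometric regularizer is a squared hinge penalizing any two class embeddings closer than a margin `nu`. The function names, `nu`, and the learning rate `lr` are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # one row per class embedding (assumed layout)


def average_client_weights(client_ws: List[Matrix]) -> Matrix:
    """Plain FedAvg: element-wise mean of the clients' class-embedding matrices."""
    n = len(client_ws)
    rows, cols = len(client_ws[0]), len(client_ws[0][0])
    return [[sum(w[r][c] for w in client_ws) / n for c in range(cols)]
            for r in range(rows)]


def spreadout_penalty(W: Matrix, nu: float) -> float:
    """Assumed squared-hinge penalty over ordered pairs of distinct classes."""
    total = 0.0
    for i, wi in enumerate(W):
        for j, wj in enumerate(W):
            if i != j:
                total += max(0.0, nu - math.dist(wi, wj)) ** 2
    return total


def spreadout_step(W: Matrix, nu: float, lr: float) -> Matrix:
    """One gradient-descent step on spreadout_penalty; pushes close classes apart."""
    grads = [[0.0] * len(row) for row in W]
    for i, wi in enumerate(W):
        for j, wj in enumerate(W):
            d = math.dist(wi, wj)
            if i == j or d == 0.0 or d >= nu:
                continue  # zero gradient outside the margin; undefined at d == 0
            # The pair appears twice in the ordered-pair sum, so the gradient
            # w.r.t. w_i is 2 * (-2 * (nu - d) * (w_i - w_j) / d).
            scale = -4.0 * (nu - d) / d
            for k in range(len(wi)):
                grads[i][k] += scale * (wi[k] - wj[k])
    return [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W, grads)]


def server_round(client_ws: List[Matrix], nu: float = 1.0, lr: float = 0.05) -> Matrix:
    """Average the client updates, then apply the spreadout regularizer on the server."""
    return spreadout_step(average_client_weights(client_ws), nu, lr)


if __name__ == "__main__":
    clients = [[[0.0, 0.0], [0.2, 0.0]],  # client 0's copy of W (2 classes)
               [[0.0, 0.0], [0.0, 0.0]]]  # client 1's copy of W
    W_avg = average_client_weights(clients)
    W_new = server_round(clients)
    print(spreadout_penalty(W_avg, 1.0), spreadout_penalty(W_new, 1.0))
```

The regularizer only acts on pairs inside the margin, so classes that are already well separated are left untouched and the server step costs nothing once the embedding space is spread out.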

Positive and Unlabeled Federated Learning | OpenReview: ... Therefore, existing PU learning methods can hardly be applied in this situation. To address this problem, we propose a novel framework, namely Federated learning with Positive and Unlabeled data (FedPU), to minimize the expected risk of multiple negative classes by leveraging the labeled data in other clients.

Federated learning with only positive labels and federated deep ...: a Google TechTalk presented by Felix Yu (Google) on 2020/7/30.

Federated learning with only positive labels - Google Research: the FedAwS abstract, in which the server imposes a geometric regularizer after each round to encourage classes to be spread out in the embedding space.
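The FedPU entry says existing PU learning methods are hard to apply when a client's unlabeled data mixes several negative classes. For reference, the standard single-client, binary PU risk estimators those methods build on fit in a few lines. This sketch is not FedPU's multi-class federated objective: it shows the unbiased PU risk (uPU) and its non-negative correction (nnPU, Kiryo et al.) with a sigmoid loss, and it assumes the class prior `prior` (the fraction of positives in the unlabeled data) is known. Function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def sigmoid_loss(score: float, label: int) -> float:
    """l(z, y) = 1 / (1 + exp(y * z)), computed without overflow."""
    t = label * score
    if t >= 0:
        e = math.exp(-t)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(t))


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def pu_risks(pos_scores: Sequence[float], unl_scores: Sequence[float],
             prior: float) -> Tuple[float, float]:
    """Return (uPU risk, nnPU risk) for a binary scorer.

    pos_scores: classifier outputs on labeled positives.
    unl_scores: classifier outputs on unlabeled data.
    prior: assumed-known fraction of positives in the unlabeled data.
    """
    r_p_plus = _mean([sigmoid_loss(s, +1) for s in pos_scores])   # positives scored as +1
    r_p_minus = _mean([sigmoid_loss(s, -1) for s in pos_scores])  # positives scored as -1
    r_u_minus = _mean([sigmoid_loss(s, -1) for s in unl_scores])  # unlabeled scored as -1
    # Negative-class risk: unlabeled-as-negative minus the positives hidden in it.
    neg_risk = r_u_minus - prior * r_p_minus
    upu = prior * r_p_plus + neg_risk
    nnpu = prior * r_p_plus + max(0.0, neg_risk)  # a true risk cannot be negative
    return upu, nnpu
```

With an overconfident scorer, for example `pu_risks([10.0, 10.0], [-10.0, -10.0], 0.3)`, the uPU estimate goes negative while nnPU clips the negative-class term at zero; that clipping is the design choice behind nnPU.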

Federated Learning with Only Positive Labels | Request PDF - ResearchGate: Authors: Felix X. Yu, Ankit Singh Rawat, Aditya Krishna Menon, Sanjiv Kumar. Abstract: We consider learning a multi-class...

Federated Learning with Only Positive Labels (Rawat, Ankit Singh, et al.): Abstract: Generally, the present disclosure is directed to systems and methods that perform spreadout regularization to enable learning of a multi-class classification model in the federated setting, where each user has access to the positive data associated with only a limited number of classes (e.g. ...

Federated Learning with Positive and Unlabeled Data | DeepAI: the same FedPU abstract as the OpenReview entry (existing PU learning methods can hardly be applied, so FedPU minimizes the expected risk of multiple negative classes by leveraging the labeled data in other clients). The page also lists the references "Federated learning with only positive labels" (International Conference on Machine Learning, pages 10946-10956, PMLR, 2020) and "Benchmarking semi-supervised federated learning" (Jan 2020).

Federated Learning with Only Positive Labels | DeepAI: the same FedAwS abstract as the Papers with Code entry.

Machine Learning Glossary | Google Developers (Oct 28, 2022): A dataset with 1,000,000 negative labels and 10 positive labels has a negative-to-positive ratio of 100,000 to 1, so it is class-imbalanced. In contrast, a dataset with 517 negative labels and 483 positive labels is not class-imbalanced, because its ratio of negative to positive labels is relatively close to 1.

Federated Learning with Only Positive Labels: Abstract: We consider learning a multi-class classification model in the federated setting, where each user has access to the positive data associated with only a single class. As a result, during each federated learning round, the users need to locally update the classifier without having access to the features and the model parameters for the negative labels.
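The glossary's class-imbalance check reduces to a single ratio. A minimal sketch (the function name is hypothetical) reproduces both glossary examples, plus the extreme case relevant to this topic: a client that holds only its own class has no negatives at all, so in a one-vs-rest view its local negative-to-positive ratio is 0.

```python
def negative_to_positive_ratio(num_negative: int, num_positive: int) -> float:
    """Ratio of negative to positive labels; far from 1 means class-imbalanced."""
    if num_positive <= 0:
        raise ValueError("need at least one positive label")
    return num_negative / num_positive


print(negative_to_positive_ratio(1_000_000, 10))       # 100000.0 -> imbalanced
print(round(negative_to_positive_ratio(517, 483), 2))  # 1.07 -> roughly balanced
# One-vs-rest view of a client holding only its own class (hypothetical count of 50):
print(negative_to_positive_ratio(0, 50))               # 0.0 -> no negatives at all
```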

US20210326757A1 - Federated Learning with Only Positive Labels - Google ...: Generally, the present disclosure is directed to systems and methods that perform spreadout regularization to enable learning of a multi-class classification model in the federated setting, ...

Federated Learning with Only Positive Labels: Paper and Code: the same abstract (each user has access to the positive data associated with only a single class).

Federated learning with only positive labels | Proceedings of the 37th ...: Authors: Felix X. Yu, Google Research, New York ...

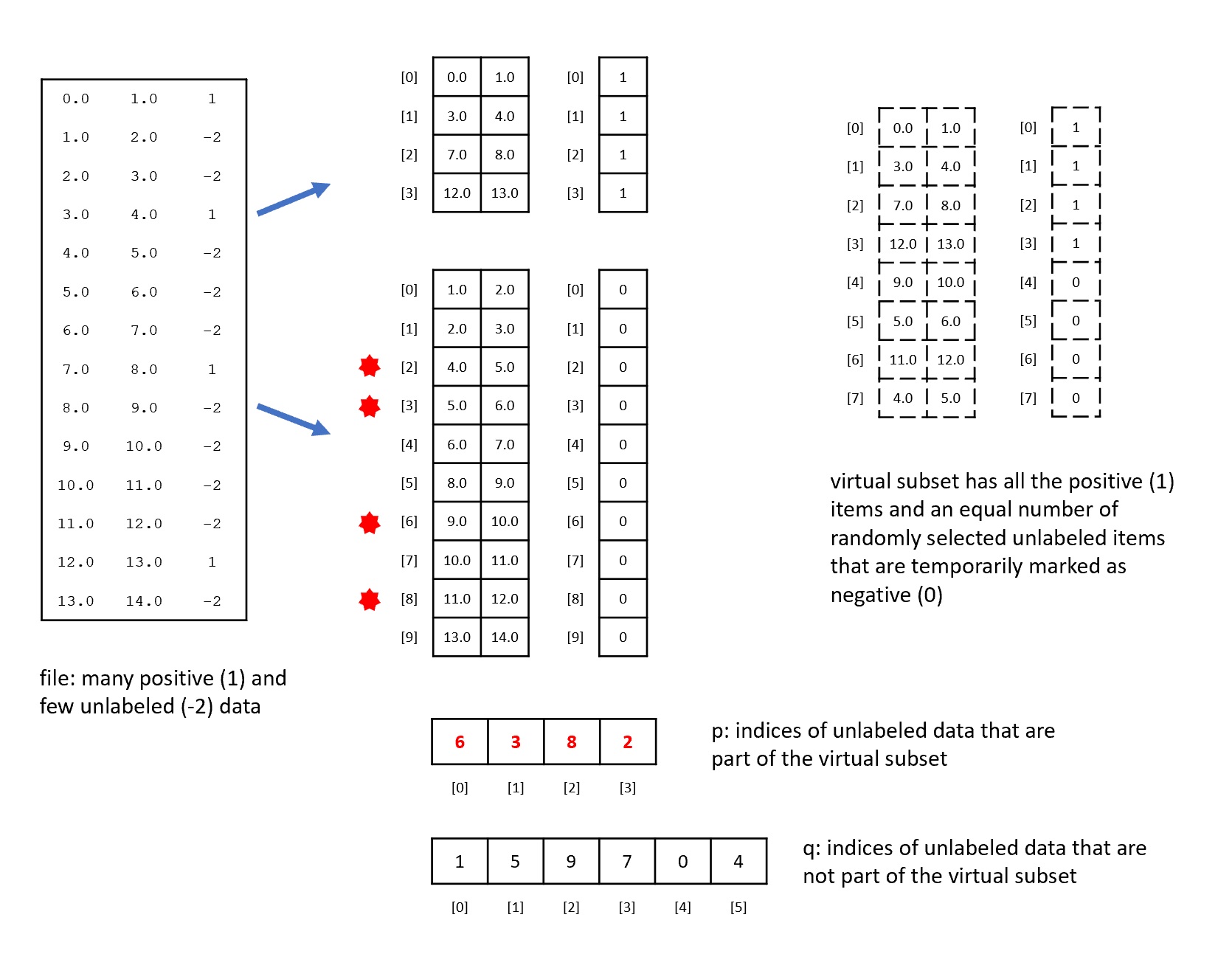

Federated Learning with Only Positive Labels - arxiv-vanity.com, Section 3.2 (Federated learning with only positive labels): In this work, we consider the case where each client has access to only the data belonging to a single class. To simplify the notation, we assume that there are m = C clients and the i-th client has access to the data of the i-th class.
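To see why this setting is hard, combine the partition it describes (client i holds only class i) with a loss that only pulls each embedding toward its own class vector. The sketch is illustrative and assumes a squared-distance positive loss rather than the paper's exact loss. It shows that a fully collapsed model, where every embedding and every class vector is the same point, attains zero loss on every client while its nearest-class classifier predicts class 0 for every input. Names and data are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Example = Tuple[List[float], int]  # (embedding of an input, class label)


def partition_by_class(examples: List[Example]) -> Dict[int, List[Example]]:
    """Assign client i exactly the examples of class i (m = C clients)."""
    clients: Dict[int, List[Example]] = defaultdict(list)
    for emb, label in examples:
        clients[label].append((emb, label))
    return dict(clients)


def positive_only_loss(client_data: List[Example], W: List[List[float]]) -> float:
    """Pull each embedding toward its own class vector; there is no negative term."""
    return sum(math.dist(emb, W[label]) ** 2 for emb, label in client_data)


def nearest_class(emb: List[float], W: List[List[float]]) -> int:
    """Predict the class whose vector is closest (ties go to the lowest index)."""
    return min(range(len(W)), key=lambda c: math.dist(emb, W[c]))


num_classes, per_class = 4, 5
# Collapsed solution: every embedding and every class vector is the origin.
examples = [([0.0, 0.0], c) for c in range(num_classes) for _ in range(per_class)]
W = [[0.0, 0.0] for _ in range(num_classes)]

clients = partition_by_class(examples)
losses = {i: positive_only_loss(data, W) for i, data in clients.items()}
accuracy = sum(nearest_class(e, W) == y for e, y in examples) / len(examples)
print(losses)    # every client's loss is 0.0
print(accuracy)  # 0.25, i.e. 1 / num_classes
```

Because no client sees negatives, nothing in this objective pushes class vectors apart; that missing force is what a server-side regularizer that encourages classes to be spread out is meant to supply.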

Reading notes: Federated Learning with Only Positive Labels. The authors consider a novel problem, federated learning with only positive labels, and propose FedAwS, an algorithm that can learn a high-quality classification model without negative instances on clients. Pros: the problem formulation is new, and the authors justify the proposed method both theoretically and empirically.

![[PDF] Benchmarking Semi-supervised Federated Learning ...](https://d3i71xaburhd42.cloudfront.net/6ccab5a0293bb153bb21dace7c5a9edba3fbdd1a/4-Figure1-1.png)
